EvoBot

At the beginning of the project, ITU discussed the main requirements for the next version of the robot with the rest of the consortium, and this feedback was compiled into a specification document. Our analysis of the requirements requested by our partners soon showed that the previous robotic platform needed a complete redesign. ITU therefore designed a new robot, called EvoBot, from scratch to obtain a flexible and versatile robotic platform. Its design is open source, and detailed build instructions are available in the project repositories (https://bitbucket.org/afaina/evobliss-software and https://bitbucket.org/afaina/evobliss-hardware) and on the DIY community site Thingiverse (https://www.thingiverse.com/thing:2776125).

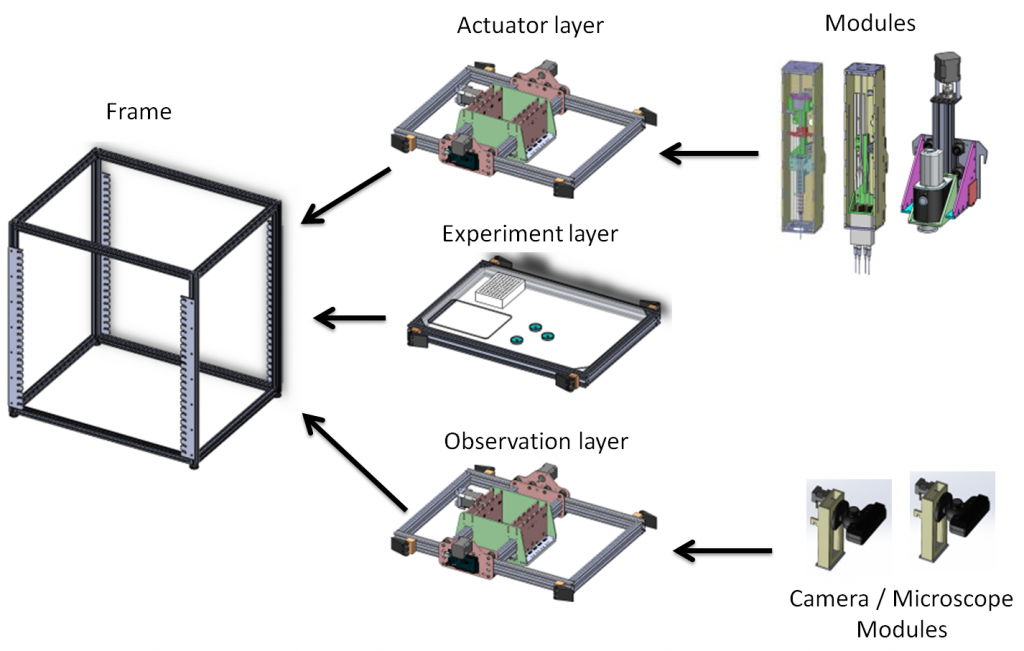

To build a reconfigurable and extensible liquid handling robot, we employed a modular design that builds on research in the field of modular robotics. A modular approach encapsulates complexity while providing a simple plug-and-play interface, which allows non-expert users to configure the robot for a wide range of experiments. To further increase the versatility of the robot, it has two levels of modularity (see Figure 1). At the first level, the robot consists of a structural frame with horizontal sockets, to which different kinds of layers are attached with a cam lever mechanism. A layer can be moved up or down in a few seconds. In its basic configuration the robot has three layers: actuation at the top, the experiment in the middle, and observation at the bottom. This default configuration can easily be changed if an experiment requires it.

The second level of modularity is provided by modules that plug into the layers and add new functionality to the system. The modules currently available are syringe modules in five versions (syringe sizes from 100 µl to 20 ml), a dispensing module that pumps up to four different liquids through peristaltic pumps, and a heavy-payload module that can move heavy devices, such as an OCT scanner or a paste extruder, up and down.
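The two levels of modularity can be pictured as a small data model: layers occupy sockets in the frame, and modules plug into layers. The sketch below is a hypothetical illustration, not EvoBot's actual software; the classes `Frame`, `Layer` and `Module`, the number of sockets and the top-to-bottom socket numbering are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Module:
    """Second-level unit plugged into a layer, e.g. a syringe module (hypothetical)."""
    name: str


@dataclass
class Layer:
    """First-level unit clamped into a frame socket with the cam lever."""
    role: str  # e.g. "actuation", "experiment" or "observation"
    modules: List[Module] = field(default_factory=list)

    def plug(self, module: Module) -> None:
        self.modules.append(module)


@dataclass
class Frame:
    """Structural frame with horizontal sockets; index 0 is the top (assumed)."""
    n_sockets: int
    sockets: List[Optional[Layer]] = field(init=False)

    def __post_init__(self) -> None:
        self.sockets = [None] * self.n_sockets

    def attach(self, layer: Layer, socket: int) -> None:
        if self.sockets[socket] is not None:
            raise ValueError(f"socket {socket} is already occupied")
        self.sockets[socket] = layer

    def move(self, src: int, dst: int) -> None:
        """Release a layer, slide it to another free socket and clamp it again."""
        layer = self.sockets[src]
        if layer is None:
            raise ValueError(f"socket {src} is empty")
        self.attach(layer, dst)  # raises if dst is occupied, leaving src untouched
        self.sockets[src] = None

    def layout(self) -> List[str]:
        """Roles of the attached layers, from top to bottom."""
        return [layer.role for layer in self.sockets if layer is not None]


if __name__ == "__main__":
    frame = Frame(n_sockets=6)
    actuation = Layer("actuation")
    actuation.plug(Module("syringe module"))
    actuation.plug(Module("dispensing module"))
    frame.attach(actuation, 0)
    frame.attach(Layer("experiment"), 2)
    frame.attach(Layer("observation"), 5)
    frame.move(2, 3)  # lower the experiment layer by one socket
    print(frame.layout())  # ['actuation', 'experiment', 'observation']
```

Keeping the layer as the unit that moves means that repositioning the experiment layer carries its plugged-in modules along with it, which mirrors how the physical layers are relocated as a whole.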

Standalone robot

EvoBot can be programmed from a computer through a Python API, i.e. a set of Python functions. To extend EvoBot’s functionality, we have added a standalone controller based on a Raspberry Pi 3. This embedded computer was chosen for its powerful Graphics Processing Unit (GPU) and its Camera Serial Interface Type 2 (CSI-2), which together allow video to be processed in real time. The standalone controller also lets us ship all the necessary software with the robot, so users no longer need to install and update it themselves. Furthermore, the controller runs a web server that makes it possible to monitor experiments remotely, which is important for long-running experiments.

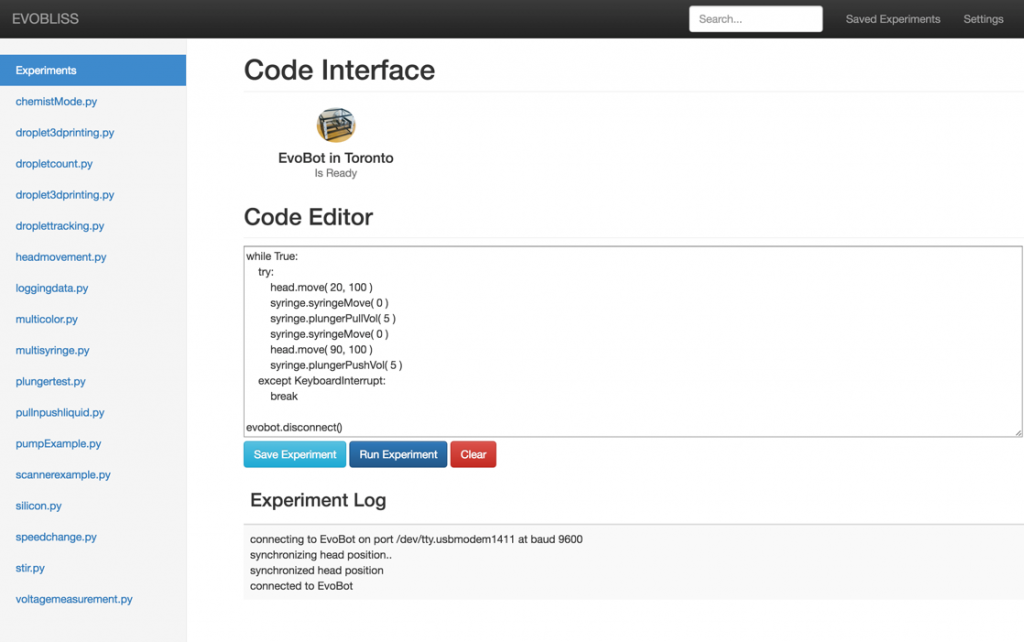

In addition to the standalone controller, we have developed several graphical user interfaces. For example, the coding web interface (Figure 2) is a webpage where users write Python code in a code editor, just as they would on a desktop computer, and then either run it as an experiment on EvoBot or save it for later use. The project navigator on the left gives access to the built-in examples provided in EvoBot’s repository; users can browse these files and use them as is or modify them to suit their needs.

We also developed interfaces that allow chemists or biologists to use the robot without any programming: an iPad interface based on drag-and-drop gestures, and a protocol-based interface that defines an experiment in two steps. In the first step the user selects the vessels, and in the second step the user specifies the liquid handling operations.
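The two-step protocol interface can be illustrated with a dry-run check: vessels are declared first, and the liquid handling operations are then validated against them before anything runs on the robot. This is a hypothetical sketch; the `Vessel` and `Transfer` types, the restriction to simple transfers and the capacity check are assumptions, not the actual interface.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Vessel:
    """Step 1: a vessel the user places on the experiment layer (hypothetical)."""
    name: str
    capacity_ml: float
    volume_ml: float = 0.0


@dataclass
class Transfer:
    """Step 2: one liquid handling operation between two declared vessels."""
    source: str
    target: str
    volume_ml: float


def dry_run(vessels: List[Vessel], steps: List[Transfer]) -> Dict[str, float]:
    """Simulate the protocol and return the final volumes; raise on an invalid step."""
    volume = {v.name: v.volume_ml for v in vessels}
    capacity = {v.name: v.capacity_ml for v in vessels}
    for i, step in enumerate(steps):
        for name in (step.source, step.target):
            if name not in volume:
                raise ValueError(f"step {i}: unknown vessel {name!r}")
        if step.volume_ml <= 0:
            raise ValueError(f"step {i}: volume must be positive")
        if step.volume_ml > volume[step.source]:
            raise ValueError(f"step {i}: not enough liquid in {step.source!r}")
        if volume[step.target] + step.volume_ml > capacity[step.target]:
            raise ValueError(f"step {i}: {step.target!r} would overflow")
        volume[step.source] -= step.volume_ml
        volume[step.target] += step.volume_ml
    return volume


if __name__ == "__main__":
    vessels = [
        Vessel("water", capacity_ml=100.0, volume_ml=50.0),
        Vessel("reagent", capacity_ml=50.0, volume_ml=20.0),
        Vessel("dish", capacity_ml=10.0),
    ]
    steps = [Transfer("water", "dish", 5.0), Transfer("reagent", "dish", 2.0)]
    print(dry_run(vessels, steps))  # {'water': 45.0, 'reagent': 18.0, 'dish': 7.0}
```

Separating the vessel declaration from the operations is what makes this kind of early check possible: every operation can only refer to vessels the user has already placed.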

Evolutionary experiments

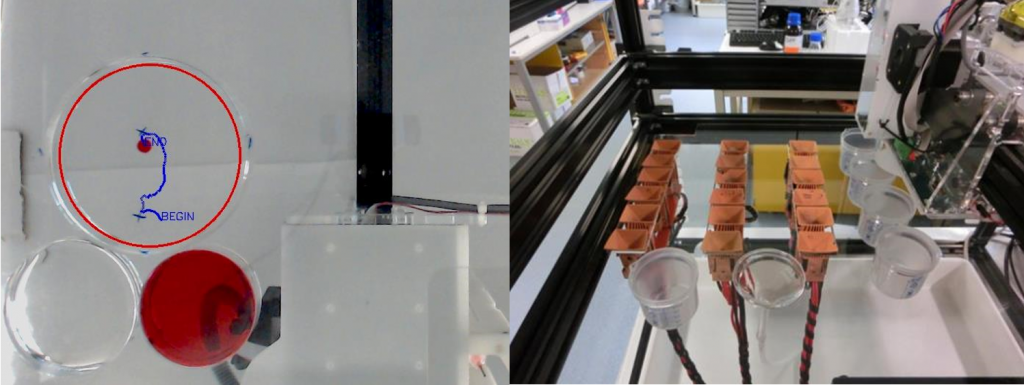

EvoBot has been used in several scientific experiments, in which it ran uninterrupted for long periods of time. Specifically, we used evolutionary algorithms to optimize biological and chemical processes. In one of these experiments, EvoBot tests different combinations of pH and decanoate concentration to optimize the chemotaxis of oil droplets (1-decanol) towards salt gradients. After mixing the reagents to reach the desired pH and concentration, the robot places a red oil droplet at one side of a Petri dish and salt at the other side. The robot’s camera then tracks the movement of the droplet, and a score, or fitness, is computed from the degree to which the droplet moves along the salt gradient (Figure 3, left). By iteratively selecting the best solutions, varying them and evaluating the variants, the algorithm discovers droplets that move towards the salt. This experiment revealed chemotactic movement at pH 7, which could be useful in biological systems.
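The select-vary-evaluate cycle described above can be sketched as a small (mu + lambda) evolutionary loop. This is a minimal illustration under stated assumptions, not the algorithm used in the project: the search bounds, the Gaussian mutation, the fitness defined as net displacement of the tracked droplet along the salt direction, and the `toy_run` stand-in for a real robot run are all hypothetical. The toy response surface is invented and simply peaks at the centre of the assumed bounds; it models no chemistry.

```python
import random
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float]
Genome = Tuple[float, ...]  # (pH, decanoate concentration in mM)

# Illustrative search bounds; the ranges used in the real experiment may differ.
BOUNDS: List[Tuple[float, float]] = [(5.0, 13.0), (5.0, 25.0)]


def gradient_score(track: Sequence[Point], salt_dir: Point = (1.0, 0.0)) -> float:
    """Net droplet displacement projected onto the (unit) direction of the salt."""
    (x0, y0), (x1, y1) = track[0], track[-1]
    return (x1 - x0) * salt_dir[0] + (y1 - y0) * salt_dir[1]


def mutate(genome: Genome, rng: random.Random, sigma: float = 0.1) -> Genome:
    """Gaussian mutation scaled to each parameter's range, clipped to the bounds."""
    child = []
    for value, (lo, hi) in zip(genome, BOUNDS):
        value += rng.gauss(0.0, sigma * (hi - lo))
        child.append(min(hi, max(lo, value)))
    return tuple(child)


def evolve(evaluate: Callable[[Genome], float], generations: int = 20,
           mu: int = 4, lam: int = 8, seed: int = 0) -> Tuple[Genome, float]:
    """(mu + lambda) loop: select the best, vary them, evaluate the variants."""
    rng = random.Random(seed)
    pop = [tuple(rng.uniform(lo, hi) for lo, hi in BOUNDS) for _ in range(mu)]
    scored = [(evaluate(g), g) for g in pop]
    for _ in range(generations):
        elite = sorted(scored, key=lambda s: s[0], reverse=True)[:mu]
        parents = [g for _, g in elite]
        children = [mutate(rng.choice(parents), rng) for _ in range(lam)]
        scored = elite + [(evaluate(c), c) for c in children]  # elitism
    best_score, best = max(scored, key=lambda s: s[0])
    return best, best_score


def toy_run(genome: Genome) -> List[Point]:
    """Stand-in for a real run (mix, place droplet, track it with the camera).
    The response surface is invented for illustration only."""
    ph, conc = genome
    dx = 10.0 - (ph - 9.0) ** 2 - 0.1 * (conc - 15.0) ** 2
    return [(0.0, 0.0), (dx, 0.0)]


if __name__ == "__main__":
    best, score = evolve(lambda g: gradient_score(toy_run(g)))
    print(f"best pH={best[0]:.2f}, conc={best[1]:.1f} mM, score={score:.2f}")
```

On the robot, `evaluate` would trigger a physical experiment, so each evaluation costs real time and reagents; small populations and elitism (never discarding the best recipe found so far) are a common choice in that setting.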

Similarly, we used evolutionary algorithms to improve the power output of microbial fuel cells (MFCs) (Figure 3, right). In this case, the parameters to optimize form a recipe composed of three reagents (casein, acetate and urine). The robot mixes the three liquids according to each recipe and feeds the mixture to the MFCs. It then records the MFC voltages for 4 hours and computes a score for each recipe from these voltages. This experiment showed, for the first time, evolutionary algorithms working together with robot-assisted microbial fuel cells, and it led to novel observations about automated feeding regimes and power output responses.
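The MFC experiment needs two small pieces of logic: turning a candidate recipe into reagent volumes, and turning a voltage log into a score. The sketch below is hypothetical. Representing a recipe as non-negative weights normalised to a fixed feed volume, and scoring it by the trapezoidal area under the voltage curve, are assumptions; the text only states that the score is based on the recorded voltages.

```python
from typing import Dict, Sequence

REAGENTS = ("casein", "acetate", "urine")


def recipe_volumes(weights: Sequence[float], total_ml: float) -> Dict[str, float]:
    """Turn non-negative recipe weights into per-reagent volumes summing to total_ml."""
    if len(weights) != len(REAGENTS) or any(w < 0 for w in weights):
        raise ValueError("need one non-negative weight per reagent")
    s = sum(weights)
    if s == 0:
        raise ValueError("at least one weight must be positive")
    return {name: total_ml * w / s for name, w in zip(REAGENTS, weights)}


def voltage_score(times_h: Sequence[float], volts: Sequence[float]) -> float:
    """Trapezoidal area under the voltage curve (V*h) over the logging window."""
    if len(times_h) != len(volts) or len(times_h) < 2:
        raise ValueError("need matching series with at least two samples")
    area = 0.0
    for i in range(1, len(times_h)):
        area += 0.5 * (volts[i] + volts[i - 1]) * (times_h[i] - times_h[i - 1])
    return area


if __name__ == "__main__":
    print(recipe_volumes((2.0, 1.0, 1.0), total_ml=4.0))
    # {'casein': 2.0, 'acetate': 1.0, 'urine': 1.0}
    print(voltage_score([0.0, 1.0, 2.0, 3.0, 4.0], [0.0, 0.5, 0.5, 0.5, 0.0]))
    # 1.5
```

Normalising the weights keeps every candidate recipe at the same feed volume, so the evolutionary algorithm varies only the composition; an integrated voltage rewards recipes that sustain output over the whole 4-hour window rather than producing a short peak.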

Important results

The most important results of WP2 can be summarized in the following points:

- We have designed an open-source, general-purpose lab automation robot based on a modular approach. The modules developed provide enough functionality for a wide variety of tasks.

- Seven copies of the robot were built and distributed to the project partners, who used them to carry out different scientific experiments.

- EvoBot ran complex optimization problems in chemical and biological systems using evolutionary algorithms.

- A standalone controller in the robot allows users to run programs without an external computer and to control the robot through a webpage.